Google's algorithm | Weboptim

Gone are the days when adding a few keywords to your website and placing a few links was enough to get it to the top of the search results. Google's ranking algorithm is getting more sophisticated every year.

At each stage in the development of Search, new milestones have been set.

Among other things, Google became the world's top search engine because its "PageRank" algorithm treats the network of links pointing to websites as a voting network. This was the first major milestone. Later, "social signals" came into play, i.e. human likes and dislikes were taken into account when ranking. Specifically, this means social shares and likes, whether on Facebook, Google+ or Twitter.
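To make the "links as votes" idea more concrete, here is a minimal sketch of a PageRank-style calculation. It is a textbook simplification, not Google's production algorithm. The function name, the damping value of 0.85 and the four-page example web are all assumptions chosen for illustration.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Rank pages by treating every link as a vote (simple power iteration).

    links maps each page to the list of pages it links to.
    The damping factor 0.85 is a commonly quoted textbook value (assumption).
    """
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    pages = sorted(pages)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page starts each round with a small baseline score.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its vote evenly among the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page without outgoing links spreads its vote over all pages.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical four-page web: three pages link to "home".
example_links = {
    "home": ["blog"],
    "blog": ["home"],
    "shop": ["home"],
    "about": ["home"],
}
ranks = pagerank(example_links)
best_page = max(ranks, key=ranks.get)
```

In this toy web, "home" collects the most votes and ends up with the highest score, while "shop" and "about", which nobody links to, keep only the baseline.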

And where are we today? What is important in Google's eyes today?

Main policies and how they affect us:

It is still important that the user gets what they are looking for and is presented with a good service, i.e. a website. This, of course, is coupled with learning algorithms that monitor and measure our habits and deliver results accordingly.

Fighting webspam:

The aim is to promote pages with quality content and to eliminate worthless low-quality (junk) content from the results.

Maintaining a high level of user experience:

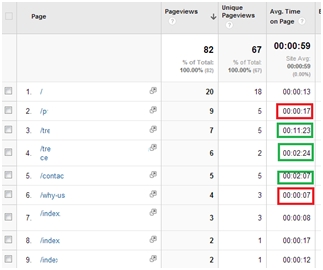

1. Monitor the use of the results list

When you search for a keyword, you get a list of results. From that list, we select a web page and click on it. Google then measures how long it takes us to return to the results list, if we return at all. If we return quickly, we obviously didn't find what we were looking for on that page. The result was not satisfactory, so in the long run such websites will not be able to compete. Of course, the law of large numbers applies: one visitor's dissatisfaction will not ruin our business.

Solution: Create a website that is good in every respect, in both content and design. There is no point in doing search engine optimisation to push forward a website that users do not appreciate. This is why search engine optimisation no longer exists as a standalone industry today. It has expanded to include content marketing, ergonomics, social marketing, etc., because it is the combination of all these that gives really good results.
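The return-to-results measurement described in this section can be sketched as follows. This only illustrates the principle. The 10-second threshold, the data layout and the page names are assumptions, and Google does not publish how it actually evaluates such signals.

```python
from collections import defaultdict

QUICK_RETURN_SECONDS = 10  # assumed threshold, not a value published by Google


def quick_return_rate(visits, threshold=QUICK_RETURN_SECONDS):
    """Share of visits per page that ended with a quick return to the results.

    visits is a list of (page, seconds_until_return) pairs; None means the
    visitor never came back to the results list.
    """
    totals = defaultdict(int)
    quick = defaultdict(int)
    for page, seconds in visits:
        totals[page] += 1
        if seconds is not None and seconds < threshold:
            quick[page] += 1
    return {page: quick[page] / totals[page] for page in totals}


# Hypothetical click log for two results of the same search.
visits = [
    ("a.example", 4), ("a.example", 6), ("a.example", None), ("a.example", 3),
    ("b.example", None), ("b.example", 120), ("b.example", 5), ("b.example", None),
]
rates = quick_return_rate(visits)
```

With this made-up log, three out of four visitors leave "a.example" within seconds, while only one in four does so on "b.example". A single unhappy visitor barely moves the rate, which is the law of large numbers at work.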

2. CTR (click-through rate) of the search results

For the results list, it is not only the return rate that counts, but also the click-through rate (CTR). If the click-through rate is low, that also tells Big Brother that there is something wrong with that result.

Solution: If we get to the point where we at least pay attention to this value, or try to estimate it, that's a big step forward. A concrete solution is to test which wording performs best and use it for our listing in the results. A good text can work wonders here.
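As a rough illustration of testing wordings, the sketch below computes CTR as clicks divided by impressions and picks the better-performing title. The titles and numbers are invented, and a real test would also need enough impressions for the difference to be meaningful.

```python
def click_through_rate(clicks, impressions):
    """CTR = clicks / impressions; returns 0.0 when there were no impressions."""
    if impressions == 0:
        return 0.0
    return clicks / impressions


# Hypothetical results for two title wordings: (clicks, impressions).
variants = {
    "Web design services": (40, 1000),
    "Web design that brings you customers": (65, 1000),
}

ctr_by_title = {
    title: click_through_rate(clicks, impressions)
    for title, (clicks, impressions) in variants.items()
}
best_title = max(ctr_by_title, key=ctr_by_title.get)
```

Here the second wording wins with a CTR of 6.5% against 4%.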

3. Google also analyses website usage

Once the visitor is on the website, the user experience can be measured through how the site is used: page loads, time on page, conversion numbers, or even purchases. These all have an impact on our Google ranking. This makes our online strategy extremely complex. Fortunately, we have tools to prepare for this.

Solution: Analyse your website frequently to identify its weaknesses and fix them. Users are the main judges of a piece of content. We just have to pay attention to them and correct the mistakes in time. That's what Google Analytics is good for. The data is there, we just need to read between the lines.

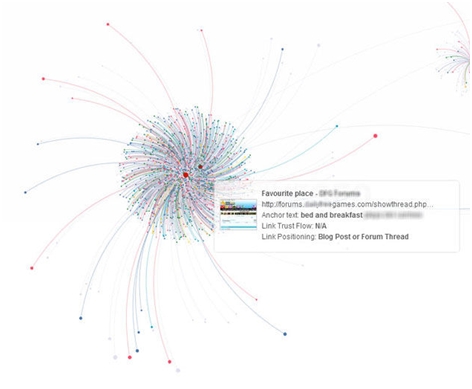

4. Links and link texts

From the very beginning, the quality of our link profile has mattered in optimisation. Obviously, as in life, the more people who say we are good, i.e. vote for us with a link, the better. But, just like in life, it does matter who says it. If the most famous authority on the subject says that we are good, that is worth much more than if the shopkeeper says the same, because the two differ in expertise on the subject in question. Google sees it the same way. That's why it puts "relevance" above all else. Consequently, links from sites that are close to our topic are stronger, because they are relevant links. In addition, until May 2013 (Google Penguin 2.0) the measurement of relevance relied heavily on the link text, the few words that describe what the link leads to. For example, the link text in the previous sentence was "Google Penguin".

This was overturned in 2013: unnatural links have been devalued.

Google puts a strong emphasis on naturalness in its new algorithm, i.e. on links that spread voluntarily. This can be judged from link text and brand usage. Brands that are prevalent online will now find it much easier to stay at the top of the listings.

Many people have fallen victim to this change.

Solution: Analyse your link profile to identify its weaknesses and how to improve them. One of the tools Google has produced for this is called the Disavow tool.

This tool lets you ask Google to ignore certain links in your link profile. One word of caution about its use: be careful :-), because this tool can also be used to disavow useful links.
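The Disavow tool takes a plain text file with one entry per line: either a full URL, or a whole domain written with the `domain:` prefix. Lines starting with `#` are comments. The sketch below builds such a file. The helper name and the example domains are hypothetical, and the output should be reviewed by hand before uploading, for exactly the reason given above.

```python
def build_disavow_file(domains, urls, note=""):
    """Return the text of a disavow file (hypothetical helper).

    domains: whole domains to disavow, written as "domain:example.com" lines
    urls: individual page URLs to disavow, one per line
    note: optional comment placed on the first line
    """
    lines = []
    if note:
        lines.append(f"# {note}")
    # Sort and de-duplicate so the file is easy to review by hand.
    lines.extend(f"domain:{domain}" for domain in sorted(set(domains)))
    lines.extend(sorted(set(urls)))
    return "\n".join(lines) + "\n"


# Made-up entries for illustration only.
disavow_text = build_disavow_file(
    domains=["spammy-directory.example", "link-farm.example"],
    urls=["http://forum.example/profile/12345"],
    note="Links identified during link profile analysis",
)
```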

5. Content

The content should be of high quality and useful to the user. As this factor grows stronger, anyone who wants to stay competitive needs to develop content, and it has to be content that users actually consume. Many people ask themselves: what can I write on my website? What can I write about a service that everyone knows? This is the task of what is known today as content strategy planning.

We can create a lot of content that is good for users, we just need to change our approach. Stop trying to sell and start thinking about what WE can give the user. What they really need. And if we think about it in this way, we will find out what we need to do.

Content development consists of 4 steps:

- Find out what your target audience needs

- Create the content

- Spread the word so people know about it

- Keep the content fresh

6. Trust index! Trust Rank!

Google is fighting against spam and measures this with a so-called Trust Rank. The logic behind this is the observation that the closer a website is to a trusted website, the less likely it is to contain spam. It is the same idea as degrees of separation between people, except that here the distance is measured in link hops. The more links and web pages you have to pass through to reach a destination, the more likely you are to find junk pages there.

So they have selected websites with 100% trust, which will definitely not show any junk, for example the BBC site. Taking these as a starting point, the question is how far our site is from these trusted sites. If we are close to the "fire", we have a higher trust index. If not, it is lower. This is plausible, and consistent with how the rest of the algorithm works. Think about it: if users don't like our website, it won't stay at the top of the listings for long.
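The distance idea described in this section can be sketched with a breadth-first search that counts link hops from a set of trusted seed sites. This is a simplified stand-in, not Google's actual computation: the published TrustRank method propagates trust scores along links rather than simply counting hops. The site names, function names and the decay factor of 0.5 are assumptions.

```python
from collections import deque


def link_distance_from_seeds(links, seeds):
    """Fewest link hops from any trusted seed to each reachable page."""
    distance = {seed: 0 for seed in seeds}
    queue = deque(seeds)
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in distance:
                distance[target] = distance[page] + 1
                queue.append(target)
    return distance


def trust_score(hops, decay=0.5):
    """Assumed rule of thumb: trust halves with every extra hop."""
    return decay ** hops


# Hypothetical link graph starting from one fully trusted seed site.
links = {
    "trusted.example": ["news.example"],
    "news.example": ["blog.example"],
    "blog.example": ["junk.example"],
}
distances = link_distance_from_seeds(links, ["trusted.example"])
scores = {page: trust_score(hops) for page, hops in distances.items()}
```

Pages that cannot be reached from any seed do not appear in the result at all, which in this simplified picture corresponds to having no inherited trust.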

7. Website speed

Perhaps many people don't realise how important this one characteristic is. Simply speeding up a website can, in a good case, increase organic traffic by 20-50%. Of course, this applies to sites where speed is the problem, i.e. they are currently slow. A site counts as slow if its loading time is longer than 3-4 seconds.

But speed matters not only because Google ranks slow sites lower; it also affects purchases, i.e. conversions. Measurements show that every second of delay causes a drop in conversions.

Check your website speed with Google's tool!

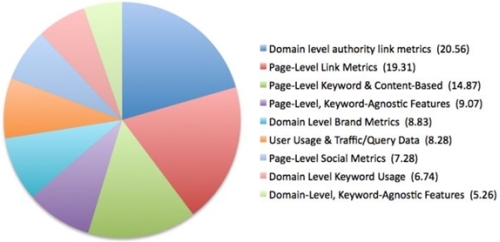

Summary:

A summary chart of the main ranking criteria:

Source: moz.com


Linkedin: http://www.linkedin.com/in/zoltanpongracz
