Possible reasons why Google is not indexing your website | Weboptim

In order for a website to appear in organic search results, Google needs to index it. If this doesn't happen, the site is effectively invisible: no one can find it.

That's why it's important to check that your site doesn't contain any elements that could cause Google indexing to fail.

Let's look at the most common causes:

1. 'www' vs. non-'www' version

Technically, the 'www' version is a subdomain. So tesco.hu and www.tesco.hu are not the same site, even if visitors can't tell the difference.

To make sure Google indexes the right version, add both versions in Google Webmaster Tools (now Google Search Console) and set which one should be the preferred domain. (Search Console has since retired this setting; a permanent 301 redirect from one version to the other achieves the same goal.)
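The logic behind redirecting one version to the other can be sketched in a few lines of Python. This is an illustration, not a server configuration. The function name `preferred_url` and the choice of www.tesco.hu as the preferred host are assumptions made for the example. Any URL on the other version is mapped onto the preferred host, which is where a 301 redirect would send visitors and Googlebot.

```python
from urllib.parse import urlsplit, urlunsplit

# Assumption for this example: the 'www' version was chosen as preferred.
PREFERRED_HOST = "www.tesco.hu"


def preferred_url(url, preferred_host=PREFERRED_HOST):
    """Return the URL rewritten onto the preferred host, or None if no redirect is needed."""
    parts = urlsplit(url)
    host = parts.hostname or ""
    # The bare domain, e.g. "tesco.hu" for "www.tesco.hu".
    bare = preferred_host[4:] if preferred_host.startswith("www.") else preferred_host
    if host == preferred_host or host not in (bare, "www." + bare):
        return None  # already on the preferred host, or a different site entirely
    # Keep scheme, path and query; only the host changes (port/credentials are dropped).
    return urlunsplit(parts._replace(netloc=preferred_host))


print(preferred_url("https://tesco.hu/offers?page=2"))  # https://www.tesco.hu/offers?page=2
print(preferred_url("https://www.tesco.hu/offers"))     # None
```

Whichever version you choose, the important part is that the same choice is applied consistently to every URL.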

2. Google has not found the page yet

This is a common problem with new websites. Wait a few days, and if Google still isn't indexing the site, make sure that the sitemap has been uploaded and is working properly. If you haven't created a sitemap, that may be the problem.

Alternatively, you can ask Google to crawl your site. The steps are described in Google's support pages.

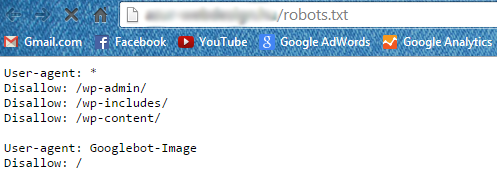

3. The page is blocked by robots.txt

The robots.txt file is located in the root directory of the website; it controls which parts of the site search engine robots are allowed to access.

A basic rule consists of two lines:

- User-agent: the name of the robot the rule applies to, e.g. Googlebot (if * is given, the rule applies to all robots)

- Disallow: the path you want to exclude from crawling, e.g. /images/

A common mistake is for the developer or editor to accidentally block the whole site in robots.txt.

This is easy to fix: remove the blocking rule, or delete the robots.txt file if it contains nothing else you need.
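To check whether a given robots.txt blocks Googlebot, you can use Python's standard `urllib.robotparser` module. Treat the sketch below as a rough check only. The helper name `googlebot_allowed` is hypothetical. The standard-library parser also applies rules in file order, while Google uses the most specific matching rule, so results can differ on files that mix Allow and Disallow lines or use wildcards.

```python
from urllib.robotparser import RobotFileParser


def googlebot_allowed(robots_txt, url):
    """Return True if these robots.txt rules allow Googlebot to crawl url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


# The common mistake: the whole site is blocked for every robot.
site_wide = "User-agent: *\nDisallow: /\n"
print(googlebot_allowed(site_wide, "https://example.com/"))  # False

# Only the /images/ folder is excluded.
images_only = "User-agent: *\nDisallow: /images/\n"
print(googlebot_allowed(images_only, "https://example.com/about"))            # True
print(googlebot_allowed(images_only, "https://example.com/images/logo.png"))  # False
```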

4. The website has no sitemap.xml file

A sitemap is a list of your website's pages that helps Google and other search engines understand your site's content. Google can also follow it when crawling the site.

A sitemap is especially useful if:

- your website is very large, so search engines may have difficulty crawling all of it

- you have a lot of archived content, or your pages are not well linked to each other

- your website is new and therefore has few external links pointing to it

- you have a lot of media content (images, video, Google News articles)

Even if none of the above applies to your website, a sitemap may still be worth uploading to help Google. It is simple to prepare, and it can even be generated online.
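A sitemap is an XML file that follows the sitemaps.org protocol. It has a `<urlset>` element with one `<url>` entry per page. Each entry has a `<loc>` and, optionally, a `<lastmod>` date. Below is a minimal generator sketch that uses only the standard library. The function name `build_sitemap` and the example URLs are assumptions.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, lastmod) pairs; lastmod is 'YYYY-MM-DD' or None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes & and < for us
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog?page=2&sort=new", None),
]))
```

Save the output as sitemap.xml in the root directory of the site, then submit it to Google.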

5. There are crawl errors

In some cases, Google does not index some subpages because, although it knows they exist, it cannot crawl them for some reason. You can find a list of such pages in Google Webmaster Tools, in the crawl errors section.
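Your own server logs show the same problem from the other side. The sketch below lists the URLs where Googlebot received a 4xx or 5xx error. It assumes an Apache or Nginx access log in the common "combined" format. The regular expression and the `googlebot_errors` helper are illustrative, not a standard tool. Anyone can fake the Googlebot user agent, so Google recommends verifying real Googlebot requests with a reverse DNS lookup.

```python
import re

# Assumed format: Apache/Nginx "combined" access log.
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_errors(lines):
    """Yield (path, status) for requests claiming to be Googlebot that got a 4xx/5xx response."""
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue  # unparseable line, or not a Googlebot request
        status = int(match.group("status"))
        if status >= 400:
            yield match.group("path"), status


sample = [
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /old-page HTTP/1.1" 404 512 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]
for path, status in googlebot_errors(sample):
    print(status, path)  # 404 /old-page
```

On a real server, you would pass in the open log file instead of the sample list.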

6. Lots of duplicate content

Duplicate content is when several different pages (URLs) provide exactly the same content. Too much repeated content can confuse search engines, which may then stop indexing it. The problem is easy to solve with 301 redirects. You can also use a canonical tag, but that's a slightly more complicated solution.
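Before fixing duplicates, you have to find them. The sketch below assumes you already have the text of each page, for example from a crawl of your site. The `find_duplicates` helper is hypothetical. It only catches exact duplicates after whitespace and letter case are normalized, not near-duplicates.

```python
import hashlib
import re
from collections import defaultdict


def find_duplicates(pages):
    """pages: mapping of URL -> page text. Returns groups of URLs with identical text."""
    groups = defaultdict(list)
    for url, text in pages.items():
        # Normalize whitespace and case so trivial differences don't hide duplicates.
        normalized = re.sub(r"\s+", " ", text).strip().lower()
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        groups[digest].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


pages = {
    "https://example.com/shoes": "Red shoes\n on sale",
    "https://example.com/shoes?ref=newsletter": "Red shoes on sale",
    "https://example.com/hats": "Hats",
}
print(find_duplicates(pages))
```

For each group, keep one URL and point the others at it with a 301 redirect or a canonical tag.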

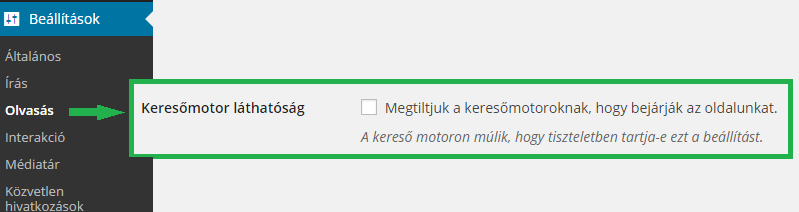

7. Privacy settings are turned on

If you run a WordPress website, check its privacy settings (in current versions: Settings > Reading > Search engine visibility). A feature that discourages search engines from indexing the site may have been turned on.
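When this option is on, WordPress typically adds a robots meta tag containing `noindex` to its pages. A quick way to confirm the setting is to look for that tag in the page source. The sketch below uses Python's built-in HTML parser. The class and function names are hypothetical. It only checks meta tags, not an `X-Robots-Tag` HTTP header, which can also carry `noindex`.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        name = (attrs.get("name") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot"):
            content = attrs.get("content") or ""
            self.directives += [d.strip().lower() for d in content.split(",")]


def has_noindex(html):
    """Return True if the page's robots meta tags contain 'noindex'."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    return "noindex" in finder.directives


print(has_noindex("<head><meta name='robots' content='noindex, nofollow' /></head>"))  # True
print(has_noindex('<head><meta name="robots" content="index, follow"></head>'))      # False
```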

8. The page is blocked by the .htaccess file

The .htaccess file is a configuration file on the server that controls how an Apache web server handles requests to the site (redirects, access rules and so on). Although it is practical and useful, it can easily be used, deliberately or by mistake, to block bots, which prevents indexing.
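.htaccess rules that block bots usually match on the visitor's user agent or deny access outright. The heuristic scanner below flags lines worth a second look. The patterns are assumptions and are neither exhaustive nor conclusive. Blocking one specific unwanted bot, or denying access to a private folder, can be perfectly legitimate.

```python
import re

# Heuristic patterns (assumptions, not exhaustive) for Apache directives
# that can keep crawlers out.
SUSPICIOUS = [
    re.compile(r"HTTP_USER_AGENT.*bot", re.IGNORECASE),  # mod_rewrite condition on user agent
    re.compile(r"^\s*(BrowserMatch|SetEnvIf(NoCase)?\s+User-Agent).*bot", re.IGNORECASE),
    re.compile(r"^\s*Deny\s+from\s+all", re.IGNORECASE),  # Apache 2.2 style
    re.compile(r"^\s*Require\s+all\s+denied", re.IGNORECASE),  # Apache 2.4 style
]


def suspicious_lines(htaccess_text):
    """Return (line number, line) pairs that may block bots and deserve review."""
    hits = []
    for number, line in enumerate(htaccess_text.splitlines(), start=1):
        if line.lstrip().startswith("#"):
            continue  # comments have no effect
        if any(pattern.search(line) for pattern in SUSPICIOUS):
            hits.append((number, line.strip()))
    return hits


htaccess = """RewriteEngine On
# block bad bots
RewriteCond %{HTTP_USER_AGENT} Googlebot [NC]
RewriteRule .* - [F,L]
"""
print(suspicious_lines(htaccess))  # [(3, 'RewriteCond %{HTTP_USER_AGENT} Googlebot [NC]')]
```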

9. Ajax/JavaScript problems

Google can index content loaded with JavaScript and Ajax, but it cannot crawl it as easily as plain HTML. So if these types of pages are configured incorrectly, search engines will not be able to index them.
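One quick test is whether your important content is present in the raw HTML the server sends, before any JavaScript runs. If the content only appears after a script inserts it, Google has to render the page to see it. The sketch below uses the standard-library HTML parser. `text_in_raw_html` is a hypothetical helper. In practice, you would feed it the HTML downloaded from your own URL.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collects text from raw HTML, ignoring the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.parts.append(data)


def text_in_raw_html(html, phrase):
    """Return True if phrase appears in the HTML's text without running any JavaScript."""
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    text = " ".join(" ".join(parser.parts).split())  # collapse whitespace
    return phrase.lower() in text.lower()


client_side = '<div id="app"></div><script>app.textContent = "Red shoes on sale";</script>'
server_side = '<div id="app"><h1>Red shoes on sale</h1></div>'
print(text_in_raw_html(client_side, "red shoes"))  # False
print(text_in_raw_html(server_side, "red shoes"))  # True
```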

10. Slow page load time

Google does not favor websites that take a long time to load. If the bots find that a page keeps loading indefinitely, they will most likely not index it.

11. Hosting barriers

If the robot cannot access the page, it cannot index it either.

When can this happen?

If the host has frequent outages, robots may not be able to reach the site. Check that your server is reliably reachable.

12. Deindexed site

If your website has a dubious history, a hidden penalty may be preventing it from being crawled. If your website has already received a penalty and has been removed from the index, it can only get back in with a lot of hard work.

Summary

Indexing is a key factor for SEO. If your website, or part of it, is not being crawled, be sure to find out why.

Source: searchenginejournal.com